NeuralChat: deploy a local chatbot within minutes

Let's create your own LLM-driven AI apps with Neural Speed and the Intel Extension for Transformers framework.

After showcasing Neural Speed in my past articles, I would like to share a direct application of the theory: NeuralChat, a tool built with Neural Speed as its very first brick.

NeuralChat is presented as “A customizable framework to create your own LLM-driven AI apps within minutes”. It is available as part of the Intel® Extension for Transformers, a Transformer-based toolkit that makes it possible to accelerate Generative AI/LLM inference on both CPU and GPU.

The framework stands out for its support for a variety of hardware platforms: from the basic Intel Core to the powerful Xeon Scalable processors, through the high-performance Gaudi AI processors and Data Center GPU Max Series, and even NVIDIA GPUs.

NeuralChat is compatible with a wide assortment of third-party frameworks: it works as an extension for the very popular PyTorch, and leverages popular domain libraries (such as Hugging Face and LangChain) with their respective extensions.

As we will see later in this article, NeuralChat supports customized models made with the weight-only quantization method I presented in previous articles, plus alternative model customizations through parameter-efficient fine-tuning and conversion/quantization.

Finally, NeuralChat offers a rich set of plugins to enhance the capabilities of Retrieval-Augmented Generation (RAG) for LLMs; in particular, I would like to highlight that NeuralChat supports the Haystack v2.0 API through IntelLabs FastRAG.

Installation

We will walk through the installation of the NeuralChat framework on a cheap, dated 7th-gen Core/Xeon machine (codename Kaby Lake, 14nm, ~2017) running Ubuntu Server 22.04 LTS.

As preliminary steps, we need to install a few requirements at the system level:

~$ sudo apt-get update

~$ sudo apt-get install libsm6 libxext6 libgl1-mesa-glx libgl1-mesa-dev ffmpeg

Then, make sure you have a safe Python virtual environment to experiment with NeuralChat. In this article I am going to use conda to create a separate virtual environment:

~$ conda create -n neuralchat python=3.11 pip

~$ conda activate neuralchat

Now, let us proceed with installing Intel Extension for Transformers (as said, NeuralChat is part of it):

(neuralchat) ~$ pip install intel-extension-for-transformers

Note: at this point, I prefer to install intel-extension-for-transformers using pip and not conda, since conda returned several compatibility issues with python=3.11.

(neuralchat) ~$ pip install fastapi==0.103.2

(neuralchat) ~$ git clone https://github.com/intel/intel-extension-for-transformers

(neuralchat) ~$ cd intel-extension-for-transformers

(neuralchat) ~/intel-extension-for-transformers$ cd intel_extension_for_transformers

(neuralchat) ~/intel-extension-for-transformers/intel_extension_for_transformers$ cd neural_chat

As you may notice, the neural_chat folder contains several requirements*.txt files. We need to install the additional requirements for our specific scenario, as follows:

# For CPU-only device

pip install -r requirements_cpu.txt

# For HPU (Habana® Gaudi® AI Processor) device

pip install -r requirements_hpu.txt

# For XPU (Intel GPU with PyTorch support) device

pip install -r requirements_xpu.txt

# For Windows

pip install -r requirements_win.txt

# For NVIDIA CUDA-enabled device

pip install -r requirements.txt

So, in my case:

(neuralchat) ~/intel-extension-for-transformers/intel_extension_for_transformers/neural_chat$ pip install -r requirements.txt

Note: as you may notice, neural-compressor and neural_speed are mandatory requirements for NeuralChat.
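If you script the setup on several machines, the device-to-file mapping listed above can be captured in a tiny Python helper. This is only a sketch: the device labels (cpu, hpu, ...) and the function name are my own illustrative choices, while the file names are the ones found in the neural_chat folder.

```python
# Requirements files as listed in the neural_chat folder.
# The dictionary keys are illustrative labels, not names used by NeuralChat itself.
REQUIREMENTS_BY_DEVICE = {
    "cpu": "requirements_cpu.txt",
    "hpu": "requirements_hpu.txt",      # Habana Gaudi AI Processor
    "xpu": "requirements_xpu.txt",      # Intel GPU with PyTorch support
    "windows": "requirements_win.txt",
    "cuda": "requirements.txt",         # NVIDIA CUDA-enabled device
}


def pip_install_command(device):
    """Return the pip command (as an argument list) for the given device label."""
    try:
        requirements_file = REQUIREMENTS_BY_DEVICE[device.lower()]
    except KeyError:
        raise ValueError(f"Unknown device label: {device!r}") from None
    return ["pip", "install", "-r", requirements_file]


print(" ".join(pip_install_command("cpu")))
```

The returned list can be passed as-is to subprocess.run() from within the neural_chat folder.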

You should now be able to see 4 new binaries available:

neural_engine

neuralchat

neuralchat_client

neuralchat_server
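A quick way to double-check this from Python is to look for the four names on your PATH. The snippet below is a minimal sketch using only the standard library; the helper name missing_binaries is my own.

```python
import shutil

# The four command-line entry points listed above.
EXPECTED_BINARIES = ["neural_engine", "neuralchat", "neuralchat_client", "neuralchat_server"]


def missing_binaries(names=EXPECTED_BINARIES):
    """Return the names that cannot be found on the current PATH."""
    return [name for name in names if shutil.which(name) is None]


missing = missing_binaries()
if missing:
    print("Not found on PATH:", ", ".join(missing))
else:
    print("All NeuralChat binaries are available.")
```

Remember to run it inside the activated neuralchat environment, otherwise the binaries will not be on the PATH.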

Test the installation

You can check whether your installation was successful with this really simple command:

(neuralchat) ~/intel-extension-for-transformers$ neuralchat predict --query "Tell me more about Generative AI."

Check the additional options for neuralchat predict with:

(neuralchat) ~$ neuralchat predict -h

Getting Started: your first “Hello World!” chatbot

Let’s test the installation of NeuralChat by implementing a very basic chatbot query/response. To do that, you will need to fulfil some extra requirements in order to use the build_chatbot() method within the intel_extension_for_transformers.neural_chat module.

(neuralchat) ~/intel-extension-for-transformers$ pip install torch

(neuralchat) ~/intel-extension-for-transformers$ pip install accelerate

(neuralchat) ~/intel-extension-for-transformers$ pip install datasets

(neuralchat) ~/intel-extension-for-transformers$ pip install uvicorn

(neuralchat) ~/intel-extension-for-transformers$ pip install yacs

(neuralchat) ~/intel-extension-for-transformers$ pip install fastchat

At this point, I suggest using the old-fashioned i(nteractive)python:

(neuralchat) ~/intel-extension-for-transformers$ pip install ipython

(neuralchat) ~/intel-extension-for-transformers$ ipython

[1] from intel_extension_for_transformers.neural_chat import build_chatbot

[2] chatbot = build_chatbot()

When the chatbot object is created, a fine-tuned (base model Mistral-7B-v0.1), pre-trained (with dataset Open-Orca/SlimOrca), pre-converted and pre-quantized model is automatically downloaded directly from the Hugging Face repository Intel/neural-chat-7b-v3-1.

When finished, you should read something like: root:INFO:Model loaded.

Now, let’s test your first prompt with:

[4] response = chatbot.predict("What are the top ten scifi movies of all times?")

[5] print("chatbot's response: ", response)

You should get a response from the model.

Note: to leave ipython just type quit().
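If you prefer a script to an interactive session, the same steps can be wrapped in two small functions. This is just a sketch: load_chatbot and ask_all are hypothetical helper names of mine, and the only NeuralChat calls involved are build_chatbot() and predict(), exactly as used in the session above.

```python
def load_chatbot():
    """Build the default chatbot; the model is downloaded on first use."""
    # Imported lazily, so ask_all() below also works with any object
    # that exposes a predict() method (handy for quick dry runs).
    from intel_extension_for_transformers.neural_chat import build_chatbot
    return build_chatbot()


def ask_all(chatbot, prompts):
    """Send each prompt to chatbot.predict() and return (prompt, response) pairs."""
    return [(prompt, chatbot.predict(prompt)) for prompt in prompts]
```

A typical use would be to call ask_all(load_chatbot(), [...]) with your list of questions and print each response in a loop.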

Getting Started: let’s do a bit of RAG

OK, it’s time to experiment with the RAG extensibility available as a plugin for NeuralChat. The developers have already made available, within the code, a subdirectory containing everything you need. As always, we start from the requirements:

(neuralchat) ~/intel-extension-for-transformers$ cd intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval

(neuralchat) ~/intel-extension-for-transformers/intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval$ pip install -r requirements.txt

Now, let’s download a PDF file in which we’ll look for information to retrieve:

(neuralchat) ~$ mkdir text

(neuralchat) ~$ wget https://rauterberg.employee.id.tue.nl/lecturenotes/DDM110%20CAS/Orwell-1949%201984.pdf -O ./text/Orwell-1984.pdf

Then, let’s implement a simple solution step by step using ipython:

(neuralchat) ~/intel-extension-for-transformers$ ipython

[1] from intel_extension_for_transformers.neural_chat import build_chatbot, PipelineConfig, plugins

[2] model_path="Intel/neural-chat-7b-v3-1"

[3] plugins.retrieval.args["embedding_model"]="BAAI/bge-base-en-v1.5"

[4] plugins.retrieval.args["persist_directory"]="./output"

[5] plugins.retrieval.args["append"]=False

[6] plugins.retrieval.args["input_path"]="./text/"

[7] plugins.retrieval.enable=True

[8] config = PipelineConfig(model_name_or_path=model_path, plugins=plugins)

[9] chatbot = build_chatbot(config)

Output: root:INFO:Model loaded.

Then, you are ready to question the model about the information extracted from the PDF:

[10] response = chatbot.predict("Who is the main character of 1984?")

Did you get the right reply?
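Steps [3] to [7] of the session above can be grouped into a reusable function. The sketch below assumes only what the session shows, namely that plugins.retrieval exposes an args dictionary and an enable flag; the function name configure_retrieval is my own, and the dry run uses a stand-in object instead of the real plugins.

```python
from types import SimpleNamespace


def configure_retrieval(plugins, input_path, persist_directory,
                        embedding_model="BAAI/bge-base-en-v1.5", append=False):
    """Enable the retrieval plugin and fill in its arguments, as in steps [3]-[7]."""
    retrieval = plugins.retrieval
    retrieval.args["embedding_model"] = embedding_model
    retrieval.args["persist_directory"] = persist_directory
    retrieval.args["append"] = append
    retrieval.args["input_path"] = input_path
    retrieval.enable = True
    return plugins


# Dry run with a stand-in that mimics only the attributes used above;
# in a real session, pass the `plugins` object imported from neural_chat.
fake_plugins = SimpleNamespace(retrieval=SimpleNamespace(args={}, enable=False))
configure_retrieval(fake_plugins, input_path="./text/", persist_directory="./output")
print(fake_plugins.retrieval.args)
```

With the real object, the result goes straight into PipelineConfig(model_name_or_path=model_path, plugins=plugins), as in step [8].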

These RAG plugin APIs simplify the creation and use of models for chatbots; their design serves as an easy-to-use extension for LangChain users and, in general, as a very user-friendly deployment solution.

For file types like txt, html, markdown, doc(x), and pdf you don’t need any particular predefined structure; xls(x), csv, and json/jsonl files, instead, must adhere to a specific structure:

xls(x) ['Questions', 'Answers'], ['question', 'answer', 'link'], or ['context', 'link']

csv ['question', 'correct_answer']

json/jsonl {'content':xxx, 'link':xxx}
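To make the structured formats more concrete, here is a minimal sketch that writes a jsonl file and a csv file with the keys and columns listed above, using only the Python standard library. The function names, file names, sample sentences and the example.com link are placeholders of mine, not something NeuralChat requires.

```python
import csv
import json
import tempfile
from pathlib import Path


def write_jsonl(path, records):
    """Write one JSON object per line, each with 'content' and 'link' keys."""
    with open(path, "w", encoding="utf-8") as handle:
        for record in records:
            row = {"content": record["content"], "link": record["link"]}
            handle.write(json.dumps(row, ensure_ascii=False) + "\n")


def write_qa_csv(path, pairs):
    """Write a CSV file with the 'question' and 'correct_answer' columns."""
    with open(path, "w", encoding="utf-8", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["question", "correct_answer"])
        writer.writerows(pairs)


# Placeholder data written to a temporary folder, only to show the file shapes.
with tempfile.TemporaryDirectory() as folder:
    jsonl_path = Path(folder) / "notes.jsonl"
    csv_path = Path(folder) / "qa.csv"
    write_jsonl(jsonl_path, [{"content": "Winston Smith works at the Ministry of Truth.",
                              "link": "https://example.com/1984-notes"}])
    write_qa_csv(csv_path, [("Who is the main character of 1984?", "Winston Smith")])
    print(jsonl_path.read_text(encoding="utf-8"))
    print(csv_path.read_text(encoding="utf-8"))
```

In a real setup you would write these files into the folder referenced by input_path, next to the other documents.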

Customized Models

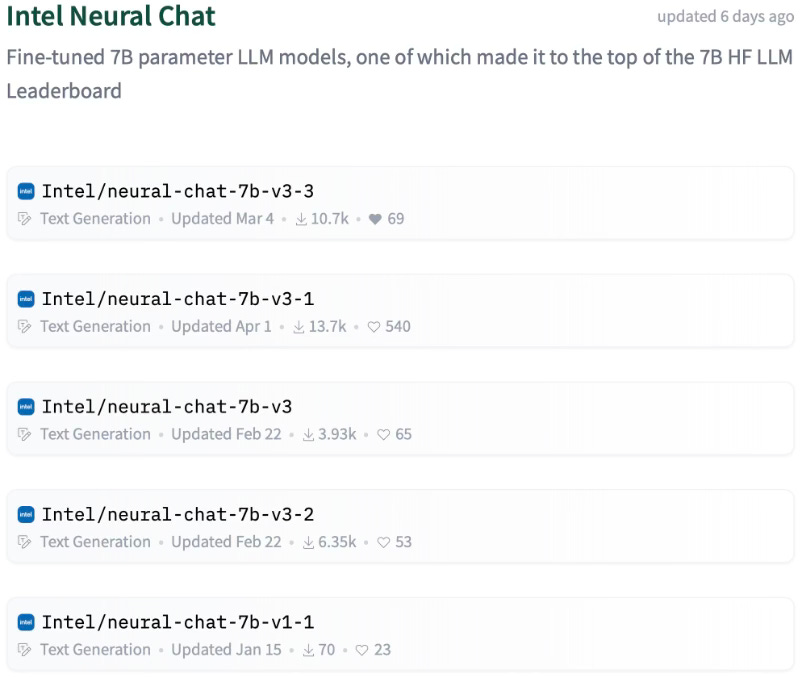

Intel has made available a set of models initially fine-tuned from Mistral-7B and then refined iteratively, each one starting from a previous version: for example, Intel/neural-chat-7b-v3-3 is a tuned version of Intel/neural-chat-7b-v3-1.

They are free, publicly available on Hugging Face, and grouped in a dedicated collection.

Advanced Features

I could talk about NeuralChat for hours: there are tons of different things to cover. Just one article is not enough, so for now I’ll leave you with a few additional tips to dig deeper into NeuralChat's advanced functionalities.

NeuralChat Server

(neuralchat) ~$ neuralchat_server start -h

usage: neuralchat_server.start [-h] --config_file CONFIG_FILE [--log_file LOG_FILE]

Where

--config_file CONFIG_FILE path to the YAML configuration file of the server

--log_file LOG_FILE path to the log file

NeuralChat Server can be deployed using a configuration file in .yaml format, customizing its behaviour by modifying the values of its fields: in there, you can set the server host, port, model name or path, tokenizer, PEFT model, cache, etc.

A complete description is available in intel_extension_for_transformers/neural_chat/server/README.md.

NeuralChat Client

(neuralchat) ~$ neuralchat_client help

The available options are:

textchat neuralchat_client text chat command

voicechat neuralchat_client voice chat command

finetune neuralchat_client finetuning command

NeuralChat General Binary

(neuralchat) ~$ neuralchat help

The available options are:

predict neuralchat text/voice chat command

usage: neuralchat.predict [-h] [--query QUERY] [--model_name_or_path MODEL_NAME_OR_PATH] [--output_audio_path OUTPUT_AUDIO_PATH] [--device DEVICE]

--query QUERY Prompt text or audio file

--model_name_or_path MODEL_NAME_OR_PATH Model name or path

--output_audio_path OUTPUT_AUDIO_PATH Audio output path if the prompt is audio file

--device DEVICE Specify chat on which device

finetune neuralchat finetuning command

usage: neuralchat.finetune [-h] [--base_model BASE_MODEL] [--device DEVICE] [--train_file TRAIN_FILE] [--max_steps MAX_STEPS]

--base_model BASE_MODEL Base model path or name for finetuning

--device DEVICE Specify finetune model on which device

--train_file TRAIN_FILE Specify train file path

--max_steps MAX_STEPS Specify max steps of finetuning
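If you want to drive the neuralchat binary from a Python script, the options listed above map naturally onto keyword arguments. The following is only a sketch: the helper name neuralchat_command is mine, it accepts just the flags shown in the two usage lines above, and the "cpu" value passed to --device is an assumption (the help output does not list the accepted values).

```python
import shlex

# Options documented in the help output above, per subcommand.
DOCUMENTED_OPTIONS = {
    "predict": {"query", "model_name_or_path", "output_audio_path", "device"},
    "finetune": {"base_model", "device", "train_file", "max_steps"},
}


def neuralchat_command(subcommand, **options):
    """Build the argument list for `neuralchat <subcommand>` from keyword options."""
    allowed = DOCUMENTED_OPTIONS.get(subcommand)
    if allowed is None:
        raise ValueError(f"Unknown subcommand: {subcommand!r}")
    command = ["neuralchat", subcommand]
    for name, value in options.items():
        if name not in allowed:
            raise ValueError(f"--{name} is not documented for {subcommand}")
        command += [f"--{name}", str(value)]
    return command


# "cpu" is an assumed device value, used here only for illustration.
command = neuralchat_command("predict", query="Tell me more about Generative AI.", device="cpu")
print(shlex.join(command))
```

The resulting list can be handed to subprocess.run(command, check=True) inside the activated neuralchat environment.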

Additional Useful Resources

Advanced Features Documentation

Jupyter Notebooks (.ipynb) to start experimenting

Remember the three steps

Install intel_extension_for_transformers requirements for your machine;

install intel_extension_for_transformers;

install neural_chat specific requirements.

References

Official Intel repository for Extension For Transformers on Github

* This content has been produced by human hands.

License

This work is licensed under Creative Commons Attribution 4.0 International