Igniting 2025 with tons of INT4 Quantizations!

More than 230 repositories with AutoRound, AutoGPTQ and AutoAWQ formatted quantized models...

With 2024 behind us and 2025 just getting started, I am proud to share that I have uploaded over 230 quantized SLM/LLM models to my Hugging Face account. These models were quantized entirely on the computational resources of my homelab, which delivers approximately 72 TFLOPS of performance, powered solely by "domestic" hardware. You can explore the models here:

»»» https://huggingface.co/fbaldassarri

Completing this initial batch took nearly four months of dedicated effort, relying exclusively on my own bare-metal resources, without any cloud services or external credits. The response has been encouraging, with thousands of downloads from my repositories so far. Looking ahead, I am preparing a new series of quantized models, leveraging diverse open-source architectures and openly sharing the methodologies I adopted to prepare them.

These repositories will remain freely available on Hugging Face, with the goal of accelerating research and development in open-source, community-driven solutions. My broader aim is to contribute to advancing AI in text generation while promoting a more inclusive and democratic approach.

I am particularly focused on advocating for INT4 quantization as an optimal solution across various use cases. As a supporter of Weight-only Quantization (WoQ) and SignRound methods, my work emphasizes the local, private, and personal inference capabilities of our daily-driver PCs.
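To give a rough idea of what weight-only INT4 quantization does, here is a minimal NumPy sketch. It uses plain round-to-nearest with one scale and zero-point per group of weights, which is only a baseline: it is not SignRound (which tunes the rounding via signed gradient descent) and it is not what AutoRound, AutoGPTQ or AutoAWQ actually run. The function names and the group size of 128 are assumptions chosen for illustration.

```python
import numpy as np


def quantize_int4_groupwise(weights, group_size=128):
    """Round-to-nearest asymmetric INT4 quantization (illustrative baseline).

    Each row is split into groups of `group_size` weights; every group gets
    its own scale and zero-point so that its values map onto integers 0..15.
    """
    rows, cols = weights.shape
    if cols % group_size != 0:
        raise ValueError("cols must be a multiple of group_size")
    groups = weights.astype(np.float32).reshape(rows, cols // group_size, group_size)

    w_min = groups.min(axis=-1, keepdims=True)
    w_max = groups.max(axis=-1, keepdims=True)
    scale = (w_max - w_min) / 15.0           # 4 bits -> 16 levels (0..15)
    scale = np.where(scale == 0, 1.0, scale)  # guard against constant groups
    zero_point = np.round(-w_min / scale)

    q = np.clip(np.round(groups / scale) + zero_point, 0, 15).astype(np.uint8)
    return q, scale, zero_point


def dequantize_int4_groupwise(q, scale, zero_point, shape):
    """Map the 4-bit integers back to approximate float weights."""
    return ((q.astype(np.float32) - zero_point) * scale).reshape(shape)


# Toy usage: a random "weight matrix" standing in for one linear layer.
rng = np.random.default_rng(0)
w = rng.normal(size=(8, 256)).astype(np.float32)
q, scale, zp = quantize_int4_groupwise(w, group_size=128)
w_hat = dequantize_int4_groupwise(q, scale, zp, w.shape)
print("max abs reconstruction error:", float(np.abs(w - w_hat).max()))
```

Only the weights are quantized here; activations stay in floating point, which is what "weight-only" means. A real INT4 format would also pack two 4-bit values into each byte, a step this sketch skips by storing one value per `uint8`.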

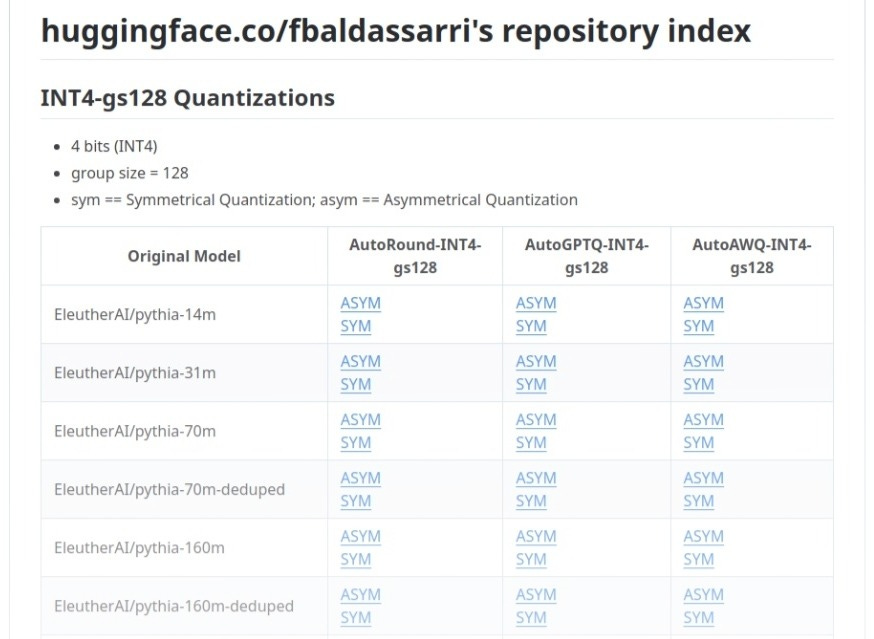

To make my Hugging Face repositories quicker and easier to navigate, I prepared an index that you can find at

»»» github.com/fbaldassarri/fbaldassarri-HF-index

I welcome feedback and collaboration. If there are specific open models you’d like to see quantized in formats such as AutoRound, AutoGPTQ, AutoAWQ, or OpenVINO IR for experimentation, feel free to connect with me. I am eager to assist wherever possible.

My Homelab has got a few upgrades!

I invested in a few new additions to my homelab. The main members of my quantization team are now:

3 x Intel Xeon 8-thread “Kaby Lake” equipped with 64GB of RAM and 1408 CUDA cores each;

1 x Intel Xeon W 16-thread “Skylake” equipped with 64GB of ECC RAM and 1024 CUDA cores;

1 x Intel Core i7-7700K “Kaby Lake” equipped with 64GB of RAM and 2176 CUDA cores;

1 x Intel Xeon W 12-thread “Cascade Lake” equipped with 256GB of RAM and 4352 CUDA cores;

1 x Intel Core Ultra 9 24-thread “Meteor Lake” equipped with 96GB of RAM and 4864 CUDA cores;

1 x Intel Core Ultra 5 18-thread “Meteor Lake” equipped with 32GB of RAM.

This list excludes the other components of my infrastructure, such as my personal workstations, NAS, routers, Kubernetes cluster, Unraid cluster, and tons of SSDs/HDDs to store everything!

References and Acknowledgments

Cheng et al., Optimize Weight Rounding via Signed Gradient Descent for the Quantization of LLMs [arXiv:2309.05516v5]

Lastly, I would like to extend my gratitude to Intel’s AI researchers and software engineers for their contributions to open-source frameworks like OpenVINO, NNCF, IPEX, and IPEX-LLM. These tools have been instrumental in maximizing the potential of my hardware. Special thanks go to the Intel AutoRound team, Wenhua Cheng et al., for their invaluable feedback and exceptional tools.

License

This work has been written by human hands, and it is licensed under Creative Commons Attribution 4.0 International.