Compress it! 8bit Post-Training Model Quantization

PyTorch model optimization for high-speed inference via Intel Neural Network Compression Framework.

This week, I want to share a few notes on 8-bit quantization of a PyTorch model using the Neural Network Compression Framework (NNCF). The goal is to squeeze the model enough to get excellent performance for local inference on your own device, without spending money on expensive new hardware or cloud API providers.

To achieve this goal, we will go through several steps, starting with the download of a PyTorch BERT model fine-tuned on MRPC (the Microsoft Research Paraphrase Corpus).

But first of all… let’s prepare a playground where we can mess around a bit!

(base) user@host:~/$ conda create -n nncf-workspace -c intel python=3.11 -y

(base) user@host:~/$ conda activate nncf-workspace

(nncf-workspace) user@host:~/$ python -m pip install --upgrade pip

Now, install the Intel Neural Network Compression (NNCF) and Intel OpenVINO™ frameworks:

(nncf-workspace) user@host:~/$ pip install "nncf>=2.5.0"

(nncf-workspace) user@host:~/$ pip install transformers "torch>=2.1" datasets evaluate tqdm --extra-index-url https://download.pytorch.org/whl/cpu

(nncf-workspace) user@host:~/$ pip install "openvino>=2024.2.0"

The Model

For this tutorial, we will load a HuggingFace BERT model, trained with PyTorch and fine-tuned for the Microsoft Research Paraphrase Corpus (MRPC) task. MRPC is a corpus of 5,801 sentence pairs collected from newswire articles. It is divided into a training subset (4,076 sentence pairs, of which 2,753 are paraphrases) and a test subset (1,725 pairs, of which 1,147 are paraphrases).

The idea is to convert the original PyTorch model to the OpenVINO Intermediate Representation (OpenVINO IR), and then quantize it to INT8 using the Post-Training Quantization API of the NNCF library.
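To make "quantize to INT8" a bit more concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. It is a plain-Python illustration of the general idea, not NNCF's actual implementation; the function names and the sample values are made up for this example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # A single floating point scale is stored next to the INT8 values.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate floats from the INT8 values."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights, chosen only for illustration.
weights = [0.82, -1.27, 0.003, 0.45, -0.61]
q_weights, scale = quantize_int8(weights)
restored = dequantize_int8(q_weights, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("INT8 values:", q_weights)
print("scale:", scale)
print("max round-trip error:", max_error)
```

Each value now fits in one byte instead of four, and the round-trip error stays within half a quantization step. That small loss is the "accuracy drop" a quantization tool tries to keep under control.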

Since fine-tuning a pre-trained BERT model on MRPC takes quite a long time, we will take advantage of PyTorch.org, which has already prepared everything we need: https://download.pytorch.org/tutorial/MRPC.zip.

BTW, if you are interested in learning more about the process, check this HuggingFace text-classification example, which shows in detail how to fine-tune a model on the MRPC task using General Language Understanding Evaluation (GLUE) benchmark data.

The Script

As the Substack editor isn’t the perfect tool for publishing code, I wrote a Python script and made it available on my GitHub profile; it follows the structure of this notebook.

To run it, just follow these steps:

(nncf-workspace) user@host:~/$ git clone https://github.com/fbaldassarri/nncf-quantization-nlp

(nncf-workspace) user@host:~/$ cd nncf-quantization-nlp

(nncf-workspace) user@host:~/nncf-quantization-nlp$ python main.py

It performs a series of steps that I want to review with you:

1. Download and prepare the pre-trained BERT model fine-tuned on the MRPC corpus;

2. Prepare the dataset, defining the data loading and accuracy validation functionality;

3. Convert the original PyTorch model to the OpenVINO Intermediate Representation (OpenVINO IR) with FP32 accuracy;

4. Prepare the model for quantization;

5. Run the optimization pipeline, optimizing the model with the NNCF Post-training Quantization API;

6. Load and test the quantized model;

7. Finally, compare the performance of the original PyTorch model against the converted IR FP32 and the quantized IR INT8 models.
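As a rough sketch of the first and third steps (loading the checkpoint and converting it to IR), something like the following should work. It is my own condensed version, not a copy of the script: the function name, the directory layout and the choice to pass `compress_to_fp16=False` (to keep FP32 weights on disk) are assumptions.

```python
from pathlib import Path

MAX_SEQ_LENGTH = 128  # same length as the [1,128] shapes passed to benchmark_app in this post
INPUT_NAMES = ["input_ids", "attention_mask", "token_type_ids"]


def convert_to_ir(pretrained_dir, ir_path):
    """Load the fine-tuned BERT checkpoint and save it as OpenVINO IR."""
    # Heavy dependencies are imported here, so the file loads even without them.
    import openvino as ov
    import torch
    from transformers import BertForSequenceClassification

    torch_model = BertForSequenceClassification.from_pretrained(pretrained_dir)
    torch_model.eval()

    # A dummy batch of ones is enough to trace the model graph.
    dummy = torch.ones(1, MAX_SEQ_LENGTH, dtype=torch.int64)
    example_input = {name: dummy for name in INPUT_NAMES}

    ov_model = ov.convert_model(torch_model, example_input=example_input)
    Path(ir_path).parent.mkdir(parents=True, exist_ok=True)
    # Assumption: disable the default FP16 weight compression to keep an FP32 IR.
    ov.save_model(ov_model, ir_path, compress_to_fp16=False)
    return ov_model


# Example call (paths are assumptions, adjust them to where MRPC.zip was unpacked):
# convert_to_ir("./model/MRPC", "./model/bert_mrpc.xml")
```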

Optimize model using NNCF Post-training Quantization API

Step 5 is the critical one. NNCF, part of the Intel OpenVINO ecosystem, is a framework of advanced algorithms for optimizing inference with a minimal accuracy drop. In this case, to optimize the BERT model we apply 8-bit (INT8) quantization in post-training mode, without a fine-tuning pipeline. The process consists of the following steps:

Create the data transformation function to transform data items from the original dataset to the model input data

Create a (calibration) dataset for quantization (instance of nncf.Dataset() class)

Run the quantization pipeline using nncf.quantize(model, calibration_dataset) for getting an optimized version of the model

Serialize the model representation (IR) using openvino.save_model() function
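The four steps above can be sketched as follows. This is a minimal sketch rather than the exact code of the script: `add_batch_dim` and `quantize_and_save` are names I made up, the input names are the usual BERT ones, and passing `model_type=nncf.ModelType.TRANSFORMER` is an assumption about the configuration.

```python
INPUT_NAMES = ["input_ids", "attention_mask", "token_type_ids"]


def add_batch_dim(data_item, input_names=INPUT_NAMES):
    """Keep only the model inputs and wrap each one in a batch of size 1."""
    return {name: [data_item[name]] for name in input_names}


def quantize_and_save(ov_model, data_source, output_path):
    """Run 8-bit post-training quantization and write the IR files."""
    # Imported here so the helper above works without these packages installed.
    import nncf
    import numpy as np
    import openvino as ov

    def transform_fn(data_item):
        # NNCF calls this on every dataset item to build the model inputs.
        batched = add_batch_dim(data_item)
        return {name: np.asarray(value, dtype=np.int64) for name, value in batched.items()}

    calibration_dataset = nncf.Dataset(data_source, transform_fn)
    quantized_model = nncf.quantize(
        ov_model,
        calibration_dataset,
        model_type=nncf.ModelType.TRANSFORMER,  # assumed setting for a BERT model
    )
    ov.save_model(quantized_model, output_path)
    return quantized_model


# The transformation applied to a fake tokenized item (values are invented):
item = {"input_ids": [101, 2023, 102], "attention_mask": [1, 1, 1],
        "token_type_ids": [0, 0, 0], "labels": 1}
print(add_batch_dim(item))
```

Note that the label is dropped by the transformation: calibration only needs the model inputs, not the ground truth.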

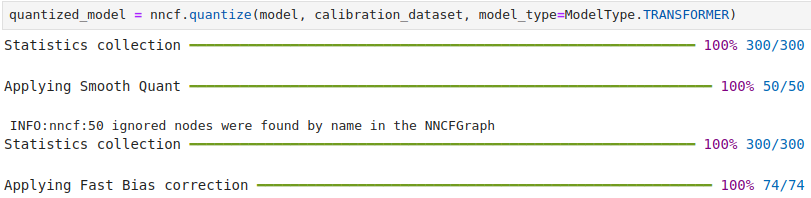

The quantization pipeline is the core of the process: in this step, statistics are collected and the SmoothQuant and Fast Bias Correction algorithms are applied.

Then, the openvino.save_model(model, 'model.xml') function saves the model into IR files (xml and bin), applying all the transformations that are usually applied in the model conversion flow. Note: floating point weights are compressed to FP16 by default, and debug information in model nodes is cleaned up.
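To give an intuition of what "collecting statistics" means, here is a toy min/max collector that watches activation values over a few calibration batches and derives 8-bit quantization parameters from them. It is only an illustration under simplified assumptions: the class name and the numbers are invented, and NNCF's real statistic collectors and correction algorithms are considerably more elaborate.

```python
class MinMaxCollector:
    """Tracks the running min and max of activation values seen during calibration."""

    def __init__(self):
        self.min_val = float("inf")
        self.max_val = float("-inf")

    def update(self, activations):
        self.min_val = min(self.min_val, min(activations))
        self.max_val = max(self.max_val, max(activations))

    def quantization_params(self, levels=256):
        """Derive an asymmetric scale and zero point covering [min, max]."""
        # The range must include 0.0 so that zero stays exactly representable.
        low = min(self.min_val, 0.0)
        high = max(self.max_val, 0.0)
        scale = (high - low) / (levels - 1) or 1.0
        zero_point = round(-low / scale)
        return scale, zero_point


collector = MinMaxCollector()
# Three invented calibration batches of activation values.
for batch in ([0.1, 2.0, -0.5], [1.5, -1.0, 0.7], [4.1, 0.0, 3.2]):
    collector.update(batch)

scale, zero_point = collector.quantization_params()
print("observed range:", collector.min_val, collector.max_val)
print("scale:", scale, "zero point:", zero_point)
```

This is why the calibration dataset matters: the observed range decides how the 256 available levels are spread over the real values.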

Load and Test OpenVINO Model

To demonstrate that the converted model is really optimized compared with the original model, and ready to run on low-budget devices, I load the quantized model and compile it directly for CPU inference. To achieve this, we use the compile_model() method of openvino.Core.

Then, from data_source we randomly pick a pair of sentences (indicated by the index `sample_idx`); the model compares the two sentences and outputs whether their meaning is the same. For example:

Sentence 1: News that oil producers were lowering their output starting in November exacerbated a sell-off that was already under way on Wall Street .

Sentence 2: News that the Organization of Petroleum Exporting Countries was lowering output starting in November exacerbated a stock sell-off already under way yesterday .

Have the same meaning? Yes
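A minimal sketch of this load, compile and classify flow could look like the code below. The helper names are mine, the input names are the usual BERT ones, and I assume that class 1 means "paraphrase", as in the GLUE MRPC labels.

```python
def same_meaning(logits):
    """Return 'Yes' when class 1 (paraphrase) has the highest logit, else 'No'."""
    predicted_class = max(range(len(logits)), key=lambda i: logits[i])
    return "Yes" if predicted_class == 1 else "No"


def classify_pair(model_xml, sample,
                  input_names=("input_ids", "attention_mask", "token_type_ids")):
    """Compile the IR for CPU and classify one tokenized sentence pair."""
    # Imported here so same_meaning() can be tried without OpenVINO installed.
    import numpy as np
    import openvino as ov

    core = ov.Core()
    compiled_model = core.compile_model(core.read_model(model_xml), "CPU")
    # Add a batch dimension of 1 to every input.
    inputs = {name: np.asarray([sample[name]], dtype=np.int64) for name in input_names}
    logits = compiled_model(inputs)[compiled_model.output(0)]
    return same_meaning(logits[0].tolist())


# Interpreting two invented logit pairs:
print("Have the same meaning?", same_meaning([-2.1, 3.4]))
print("Have the same meaning?", same_meaning([1.8, -0.9]))
```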

Comparing F1-score of FP32 and INT8 models

F1 scoring (the harmonic mean of the precision and recall) is commonly used as an evaluation metric in binary and multi-class classification and LLM evaluation as it integrates precision and recall into a single metric to gain a better understanding of model performance.

To check the accuracy of the original model against that of the quantized model, we build a validate(model, data_source) function that evaluates the model on the GLUE MRPC dataset and returns the F1 score.
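To show how such a validation works, here is a simplified, dependency-free sketch. Unlike the function described above, this `validate` takes a generic `predict` callable instead of an OpenVINO model, and the F1 score is computed by hand; the toy data is invented.

```python
def f1_score(predictions, references, positive=1):
    """F1 = 2 * precision * recall / (precision + recall) for the positive class."""
    pairs = list(zip(predictions, references))
    tp = sum(1 for p, r in pairs if p == positive and r == positive)
    fp = sum(1 for p, r in pairs if p == positive and r != positive)
    fn = sum(1 for p, r in pairs if p != positive and r == positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def validate(predict, data_source):
    """Run `predict` on every item and score the results against the labels."""
    predictions = [predict(item) for item in data_source]
    references = [item["labels"] for item in data_source]
    return f1_score(predictions, references)


# Toy data: a stand-in "model" that reads a precomputed guess from each item.
toy_data = [
    {"guess": 1, "labels": 1},
    {"guess": 1, "labels": 0},
    {"guess": 0, "labels": 1},
    {"guess": 1, "labels": 1},
    {"guess": 0, "labels": 0},
]
score = validate(lambda item: item["guess"], toy_data)
print("F1:", round(score, 4))
```

Here there are 2 true positives, 1 false positive and 1 false negative, so precision and recall are both 2/3 and so is the F1 score. Running the same function on the FP32 and INT8 models gives two numbers that can be compared directly.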

Compare Performance of Original vs Converted vs Quantized Model

Next, we compare the original PyTorch model with the OpenVINO converted (`FP32`) and quantized (`INT8`) models to see the difference in performance.

Note: measurements are expressed in SPS (Sentences Per Second), which is the equivalent of Frames Per Second (FPS) for images.

PyTorch model on CPU: 0.206 seconds per sentence, SPS: 4.86

IR FP32 model in OpenVINO Runtime/AUTO: 0.068 seconds per sentence, SPS: 14.77

OpenVINO IR INT8 model in OpenVINO Runtime/AUTO: 0.051 seconds per sentence, SPS: 19.75
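Figures like the ones above can be obtained with a simple timing loop. The sketch below shows the idea with a fake inference function standing in for the model; `measure_sps` and `fake_infer` are hypothetical names, and the script may measure things differently.

```python
import time


def measure_sps(infer, samples):
    """Return (seconds per sentence, sentences per second) for `infer`."""
    start = time.perf_counter()
    for sample in samples:
        infer(sample)
    elapsed = time.perf_counter() - start
    seconds_per_sentence = elapsed / len(samples)
    return seconds_per_sentence, 1.0 / seconds_per_sentence


def fake_infer(sample):
    # Stand-in for a real model call; it just waits a couple of milliseconds.
    time.sleep(0.002)


sec, sps = measure_sps(fake_infer, range(20))
print(f"{sec:.3f} seconds per sentence, SPS: {sps:.2f}")
```

SPS is simply the inverse of the average time per sentence, so the same loop can be reused for the PyTorch, FP32 and INT8 models by swapping the `infer` callable.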

Evaluating Results

Finally, we measure the inference performance of the OpenVINO `FP32` and `INT8` models. For this purpose, we take advantage of the Benchmark Tool in OpenVINO.

The benchmark app provides various options for configuring execution parameters.

(nncf-workspace) user@host:~/nncf-quantization-nlp$ benchmark_app -m ./model/bert_mrpc.xml -shape [1,128],[1,128],[1,128] -d CPU -api sync

(nncf-workspace) user@host:~/nncf-quantization-nlp$ benchmark_app -m ./model/quantized_bert_mrpc.xml -shape [1,128],[1,128],[1,128] -d CPU -api sync

References

Discover and Download Intel OpenVINO™ Toolkit

How to install Intel OpenVINO on Ubuntu - GitHub.com

Post-training Quantization with NNCF (Basic 8bit, Advanced with Accuracy Control)

Understanding and Applying F1 Score: AI Evaluation Essentials with Hands-On Coding Example

Intel OpenVINO Notebook

License

This work is licensed under Creative Commons Attribution 4.0 International